Fujitsu Releases “Interstage Big Data Parallel Processing Server V1.0” to Help Enterprises Utilize Big Data

March 2, 2012 SOURCE: Fujitsu

Reliability and processing performance enhanced with Apache Hadoop solution featuring Fujitsu’s proprietary distributed file system

Tokyo, February 27, 2012 - Fujitsu today announced the development and immediate availability of Interstage Big Data Parallel Processing Server V1.0, a software package for parallel distributed processing of big data that substantially raises reliability and processing performance. These enhancements are made possible by combining Apache Hadoop(1) open source software (OSS) with Fujitsu’s proprietary distributed file system. The new software package has the added benefit of quick deployment.

By combining Apache Hadoop with Fujitsu’s proprietary distributed file system, which has a strong track record in mission-critical enterprise systems, the new solution improves data integrity while eliminating the need to transfer data to Hadoop processing servers, which substantially improves processing performance. Moreover, the new server software uses a Smart Set-up feature based on Fujitsu’s smart software technology(2), making system deployments quick and easy.

Fujitsu will support companies in their efforts to leverage big data by offering deployment assistance, including for Apache Hadoop, along with other support services.

Data collected from various sensors and from smartphones, tablets and other smart devices is not only large in volume but also comes in a wide range of formats and structures, and it accumulates rapidly. Apache Hadoop, an OSS framework that performs distributed processing of large volumes of unstructured data, is considered the industry standard for big data processing.

The new software package, based on the latest Apache Hadoop 1.0.0, brings together Fujitsu’s proprietary technologies to enable enhanced reliability and processing performance while also shortening deployment times. This helps support the use of big data in enterprise systems.

Product Features

1. Features a proprietary distributed file system for high reliability and performance

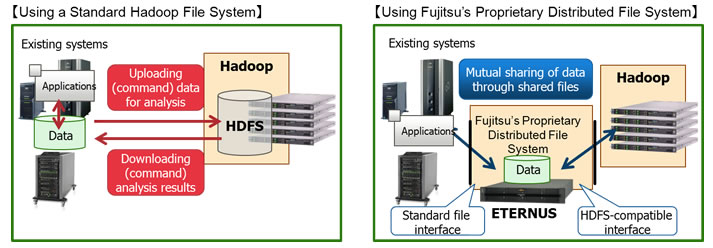

In addition to the standard Hadoop Distributed File System (HDFS), the new solution features Fujitsu’s proprietary distributed file system, which boasts a strong track record in mission-critical enterprise systems. This, in turn, enables high reliability and performance.

- Improved file system reliability

With Fujitsu’s proprietary distributed file system, Apache Hadoop’s single point of failure can be eliminated by running the master server(3) redundantly using Fujitsu cluster technology, thereby enabling high reliability. Moreover, storing data in the storage system also improves data reliability.

- Boosts processing performance by eliminating the need to transfer data to Hadoop processing servers

With Fujitsu’s proprietary distributed file system, Hadoop can process data by accessing it directly in the storage system. Unlike standard Apache Hadoop, which first copies the data to be processed into HDFS, Fujitsu’s software eliminates this transfer step, substantially reducing processing time.

- Existing tools can be used without modification

In addition to an HDFS-compatible interface, the file system supports the standard Linux file interface. This means that users can employ existing tools for backup, printing and other purposes without modification.

2. Smart Set-up for quick and easy system deployment

The new server software uses a Smart Set-up feature based on Fujitsu’s smart software technology, making deployment quick and easy. The system can automatically install and configure a pre-built system image on multiple servers at once, enabling rapid system and server deployment.

Product Pricing and Availability

| Product | Price (excl. tax) | Availability |
|---|---|---|
| Interstage Big Data Parallel Processing Server Standard Edition V1.0 (Processor license) | From 600,000 JPY | End of April 2012 |

* Pricing and availability indicated are for Japan, and those for other regions may vary.

Glossary and Notes

- (1) Apache Hadoop: Developed and released by the Apache Software Foundation (ASF), Apache Hadoop is an open-source framework for efficiently performing distributed parallel processing of massive volumes of data.

- (2) Smart software technology: Fujitsu’s proprietary technology that enables software to assess its own hardware and software environment and optimize itself for easy and worry-free use.

- (3) Master server: In Hadoop, the server that oversees distributed processing and controls the servers that perform the actual data processing.

About Fujitsu

Fujitsu is the leading Japanese information and communication technology (ICT) company offering a full range of technology products, solutions and services. Over 170,000 Fujitsu people support customers in more than 100 countries. We use our experience and the power of ICT to shape the future of society with our customers. Fujitsu Limited (TSE:6702) reported consolidated revenues of 4.5 trillion yen (US$55 billion) for the fiscal year ended March 31, 2011. For more information, please see http://www.fujitsu.com

Press Contacts

Fujitsu Limited

Public and Investor Relations Division
